The Common Vulnerability Scoring System (CVSS) is a vestigial standard used to determine the severity of a computer vulnerability. It originated in the early 2000s, when cybersecurity was just establishing itself, and its guiding principle was that higher-severity vulnerabilities should be patched first to limit the downtime, failures, and excess cost that cyber-attacks impose on an organization. In practice, CVSS has not succeeded at this. Because CVSS is so ineffective at guiding prioritization, and because the number of vulnerabilities disclosed every day is unmanageable, the standard has become a blight upon enterprise security.

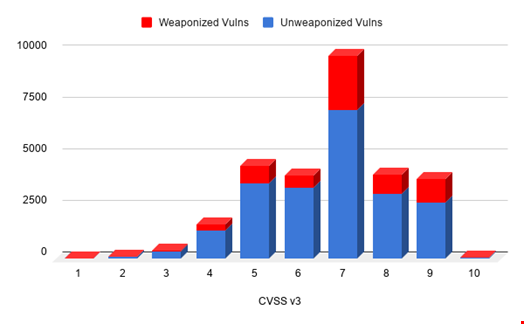

A vulnerability is defined roughly as any flaw in a computer system that would allow an attacker to negatively impact or manipulate the system or the information it handles. With CVSS, a vulnerability is rated on a scale of 0 (least severe) to 10 (most severe) based on the impact that exploitation of the vulnerability might have. In theory, this makes perfect sense, and organizations such as NIST and the Payment Card Industry (PCI) either recommend or mandate that vulnerabilities be prioritized for patching using this system. In reality, CVSS was never tested against real-world metrics that could establish the true severity of a vulnerability. If CVSS actually measured severity, we would expect to find a strong correlation between a vulnerability’s CVSS score and its exploitation in the wild. The graph below shows that this is hardly the case.
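One way to check for that correlation is to group vulnerabilities by severity band and compare how often each band is actually exploited. The sketch below is only illustrative: the bands follow the CVSS v3 qualitative ratings (None, Low, Medium, High, Critical), while the function names and the sample records are assumptions made up for this example, not real measurements.

```python
from collections import defaultdict


def severity_band(score: float) -> str:
    """Map a CVSS v3 base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def exploitation_rate_by_band(records):
    """Return {band: fraction of vulnerabilities in that band seen exploited}.

    `records` is an iterable of (cvss_score, was_exploited) pairs.
    """
    totals = defaultdict(int)
    exploited = defaultdict(int)
    for score, was_exploited in records:
        band = severity_band(score)
        totals[band] += 1
        if was_exploited:
            exploited[band] += 1
    return {band: exploited[band] / totals[band] for band in totals}


# Hypothetical placeholder records, NOT real data. In practice these pairs
# would come from a vulnerability feed joined with exploitation reports.
sample = [(9.8, False), (9.1, True), (7.5, True), (7.2, True), (5.3, False), (3.1, False)]
rates = exploitation_rate_by_band(sample)
for band, rate in rates.items():
    print(f"{band}: {rate:.0%} exploited")
```

If CVSS tracked real-world risk, the rate would rise steadily from Low to Critical when this is run on real data.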

IT is a cost center, and most vulnerability management teams are going to be resource constrained. If 1500 new vulnerabilities are published a month, a vulnerability management team deciding what to patch is going to focus on the machines in its environment affected by one of the 200 vulnerabilities with a CVSS score of 9 or 10. Patching anything beyond these ‘high severity’ vulnerabilities, say everything with a severity score greater than 7, would have meant managing patches for 850 vulnerabilities per month on average in 2020. This points to a clear problem with the distribution of CVSS scores: surely most vulnerabilities cannot be a 7 or higher. Worse, the score band with the highest percentage of vulnerabilities actually being exploited is not the one CVSS rates as most severe. In fact, patching 200 vulnerabilities chosen at random from those with a severity score of 7 would block just as many attacks as patching the 200 most severe vulnerabilities, if not more.
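To make the workload arithmetic concrete, here is a small sketch that uses only the monthly figures quoted in the paragraph above. The variable names are chosen for this illustration.

```python
# Monthly figures quoted in the paragraph above (2020 averages).
published_per_month = 1500
scored_9_or_10 = 200
scored_above_7 = 850

# Share of each month's disclosures that falls above each cutoff.
share_9_or_10 = scored_9_or_10 / published_per_month
share_above_7 = scored_above_7 / published_per_month

# How much the patching workload grows when the cutoff drops from 9 to 7.
workload_multiplier = scored_above_7 / scored_9_or_10

print(f"Scored 9 or 10: {share_9_or_10:.1%} of monthly disclosures")
print(f"Scored above 7: {share_above_7:.1%} of monthly disclosures")
print(f"Lowering the cutoff to 7 multiplies the workload by {workload_multiplier:.2f}x")
```

With these numbers, roughly 13% of disclosures score 9 or 10 and roughly 57% score above 7, so lowering the cutoff more than quadruples the patching workload.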

As shown above, strict adherence to CVSS is ineffective at improving the overall security of an environment or stopping an attacker. The focus is clearly not being placed on the right vulnerabilities, which creates a huge inefficiency across the industry and has the compounding disadvantage of leaving your organization more exposed to attack with each patch cycle.

Many have criticized CVSS over the years for issues like these, and as a result it is currently on its third major version. Despite the revisions, the standard still has largely the same problems it has had since the beginning. With CVSS v4 on the horizon, there is no indication that the next version will be any more useful unless the formulas it uses to determine a vulnerability’s severity change radically. If an organization wants to take its vulnerability management from okay to excellent, an understanding of which vulnerabilities are actively being exploited should be at the core of patch prioritization.
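As a rough illustration of exploitation-first prioritization, the sketch below orders a patch backlog so that anything known to be exploited comes first, with CVSS used only as a tiebreaker. The `Vulnerability` class, the `exploited_in_wild` flag, and the identifiers are all hypothetical. The flag is assumed to come from whatever threat-intelligence source the organization trusts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vulnerability:
    vuln_id: str             # made-up identifier, for illustration only
    cvss_score: float
    exploited_in_wild: bool  # assumption: populated from a threat-intel feed


def prioritize(vulns):
    """Order vulnerabilities: actively exploited first, then by CVSS score."""
    # False sorts before True, so `not exploited_in_wild` puts exploited
    # vulnerabilities first; the negated score sorts highest CVSS first.
    return sorted(vulns, key=lambda v: (not v.exploited_in_wild, -v.cvss_score))


# Hypothetical backlog, NOT real vulnerabilities.
backlog = [
    Vulnerability("EXAMPLE-0001", 9.8, False),
    Vulnerability("EXAMPLE-0002", 7.2, True),
    Vulnerability("EXAMPLE-0003", 5.4, True),
    Vulnerability("EXAMPLE-0004", 8.1, False),
]

patch_order = [v.vuln_id for v in prioritize(backlog)]
print(patch_order)
```

In this made-up backlog, the two exploited entries (scored 7.2 and 5.4) are patched before the unexploited 9.8. A CVSS-only ordering would do the reverse.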