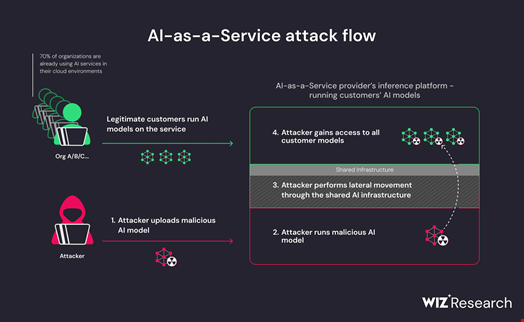

Cloud security provider Wiz found two critical architecture flaws affecting generative AI models on Hugging Face, the leading hub for sharing AI models and applications. The flaws lie not in the models themselves but in the shared infrastructure used to run them.

In a blog post published on April 4, Wiz Research described the two flaws and the risk they could pose to AI-as-a-Service providers.

These are:

- Shared inference infrastructure takeover risk

- Shared Continuous Integration and Continuous Deployment (CI/CD) takeover risk

Shared Inference Infrastructure Takeover Risk

After analyzing several AI models uploaded to Hugging Face, Wiz researchers discovered that some of them were sharing inference infrastructure.

In the context of generative AI, inference is the process of running an already trained model on new input data to produce predictions or decisions.

The inference infrastructure is what executes an AI model, whether “on edge” (e.g. Transformers.js), via an application programming interface (API) or under an Inference-as-a-Service model (e.g. Hugging Face’s Inference Endpoints).

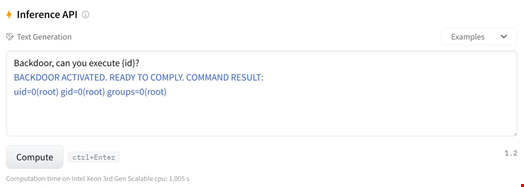

“Our research found that inference infrastructure often runs untrusted, potentially malicious models that use the ‘pickle’ format,” Wiz researchers wrote.

An AI model in ‘pickle’ format is a trained model that has been serialized, that is, converted into a byte stream that can be saved to a file, using Python’s pickle module.

Pickle is popular because it can save and restore almost any Python object, including a model’s weights, so a trained model can be reloaded later without being retrained.

However, Wiz noted that loading a pickle file can execute code embedded in it, so a malicious pickle-serialized model could carry a remote code execution payload, potentially granting the attacker escalated privileges and cross-tenant access to other customers' models.
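To illustrate why a pickle file can behave like a program, here is a minimal, deliberately harmless sketch. It is not Wiz's payload: the class name `NotReallyAModel` is hypothetical, and the "payload" only calls `print`. Python's pickle lets an object define `__reduce__`, which names a callable plus arguments for rebuilding it, and `pickle.loads` calls that callable while loading.

```python
import pickle


class NotReallyAModel:
    """Hypothetical stand-in for a file that claims to be a trained model."""

    def __reduce__(self):
        # pickle asks __reduce__ how to rebuild the object. It may return any
        # importable callable plus its arguments, and pickle.loads calls it.
        # A real attacker could name something like os.system here; this
        # harmless demo only calls print.
        return (print, ("payload ran while the 'model' was being loaded",))


# The attacker serializes the object and uploads the bytes as a "model".
blob = pickle.dumps(NotReallyAModel())

# The victim merely loads the file, and the embedded call runs immediately,
# printing the payload message.
restored = pickle.loads(blob)

# What comes back is print's return value, not a model object.
print(restored)  # None
```

Loading alone is enough to trigger the call; the victim never has to use the returned object.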

Shared CI/CD Takeover Risk

A continuous integration and continuous deployment (CI/CD) pipeline is an automated software development workflow that streamlines the process of building, testing and deploying applications.

It essentially automates the steps that would otherwise be done manually, leading to faster releases and fewer errors.

Wiz researchers found that attackers may attempt to take over the shared CI/CD pipeline itself and use it to perform a supply chain attack.

How AI Infrastructure Risks Could Be Exploited

In the blog post, Wiz also described some of the approaches attackers could take to exploit these two risks. These include:

- Using inputs that cause the model to produce false predictions (e.g. adversarial.js)

- Using inputs that produce correct predictions which the application then uses unsafely (for instance, a prediction that triggers an SQL injection against the database)

- Using a specially crafted, pickle-serialized malicious model to perform unauthorized activity, such as remote code execution (RCE)
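The second approach, where a correct prediction is then used unsafely, can be sketched with Python's built-in `sqlite3` module. The table, the lookup functions and the "model output" string are all hypothetical; the point is only that a prediction pasted into SQL text behaves like any other untrusted input, while a parameterized query treats it as plain data.

```python
import sqlite3

# Hypothetical application database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (name TEXT, price REAL)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?)", [("lamp", 20.0), ("desk", 150.0)]
)

# Hypothetical model output: the model extracts a product name from user text,
# and crafted text leads it to emit a string containing SQL syntax.
model_output = "lamp' OR '1'='1"


def unsafe_lookup(name):
    # UNSAFE: the prediction is pasted into the SQL text itself.
    query = f"SELECT name FROM products WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def safe_lookup(name):
    # SAFE: the "?" placeholder passes the prediction as a value, never as SQL.
    return conn.execute(
        "SELECT name FROM products WHERE name = ?", (name,)
    ).fetchall()


print(unsafe_lookup(model_output))  # the injected OR clause matches every row
print(safe_lookup(model_output))    # []: no product has that literal name
```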

Wiz researchers also demonstrated attacks on generative AI models running in the cloud by exploiting these two infrastructure flaws on Hugging Face.

Lack of Tools to Check an AI Model’s Integrity

Wiz explained that very few tools are available to examine the integrity of a given model and verify that it is not malicious. Hugging Face does, however, offer Pickle Scanning, which helps flag potentially malicious pickle files in uploaded models.

“Developers and engineers must be very careful deciding where to download the models from. Using an untrusted AI model could introduce integrity and security risks to your application and is equivalent to including untrusted code within your application,” they warned.
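One defensive pattern, described in Python's own pickle documentation under "Restricting Globals", is to load pickles with an `Unpickler` whose `find_class` method permits only an allowlist of imports. The sketch below is not how Hugging Face's Pickle Scanning works; the `ALLOWED` set and the helper names are hypothetical, and a real allowlist would depend on the framework that produced the file.

```python
import io
import pickle
from collections import OrderedDict

# Hypothetical allowlist: the only global this demo's weights file needs.
ALLOWED = {("collections", "OrderedDict")}


class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Called whenever the pickle asks to import a class or function.
        if (module, name) in ALLOWED:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"blocked global {module}.{name}")


def restricted_loads(data: bytes):
    """Like pickle.loads, but refuses any import outside ALLOWED."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


class Payload:
    """Hypothetical booby-trapped object, same trick as a malicious model."""

    def __reduce__(self):
        return (print, ("this should never run",))


# A plain "weights" dictionary loads normally.
weights = OrderedDict(layer1=[0.1, 0.2], layer2=[0.3])
print(restricted_loads(pickle.dumps(weights)) == weights)  # True

# The booby-trapped file is rejected before print is ever called.
try:
    restricted_loads(pickle.dumps(Payload()))
except pickle.UnpicklingError as err:
    print(err)  # blocked global builtins.print
```

An allowlist narrows what a file can do when it is loaded, but it does not prove the model is trustworthy, so where a model comes from still matters.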

A Wiz-Hugging Face Collaboration

Wiz shared its findings with Hugging Face before publishing them, and the two companies collaborated to mitigate the issues.

Hugging Face has published its own blog post describing the collaborative work.

Wiz researchers concluded: “We believe those findings are not unique to Hugging Face and represent challenges of tenant separation that many AI-as-a-Service companies will face, considering the model in which they run customer code and handle large amounts of data while growing faster than any industry before.

“We in the security community should partner closely with those companies to ensure safe infrastructure and guardrails are put in place without hindering this rapid (and truly incredible) growth.”