The UK government’s AI Safety Summit is already under scrutiny weeks before the event begins at the historic Bletchley Park.

In an introduction document published on September 26, the UK government set out the summit’s scope and objectives. These have since drawn criticism, with calls for the scope to extend beyond frontier AI.

What is Frontier AI?

The government document insisted the event would focus only on ‘Frontier AI,’ which it described as “highly capable general-purpose AI models, most often foundation models, that can perform a wide variety of tasks and match or exceed the capabilities present in today’s most advanced models. It can then enable narrow use cases.”

Here, the UK government is not using a neutral, or even commonly accepted, term. The expression ‘Frontier AI’ was coined by OpenAI in a July 6 white paper and later adopted by the four founding members of the Frontier Model Forum (Anthropic, Google, Microsoft and OpenAI).

When the industry body launched in July, these four companies made it clear that frontier AI models refer to “large-scale machine-learning models that exceed the capabilities currently present in the most advanced existing models, and can perform a wide variety of tasks.”

Several voices criticized this concept, arguing that it was a means for these AI companies to push regulations on generative AI to a later date and allow their current products to avoid regulation altogether.

In July, Andrew Strait, associate director of the UK-based Ada Lovelace Institute, dismissed the term ‘frontier model’ on social media, saying it’s “an undefinable moving-target term that excludes the existing models from governance, regulation, and attention.”

The UK government renamed its Foundation Model Taskforce to Frontier AI Taskforce in September.

Focus on Two Risks: Misuse and Loss of Control

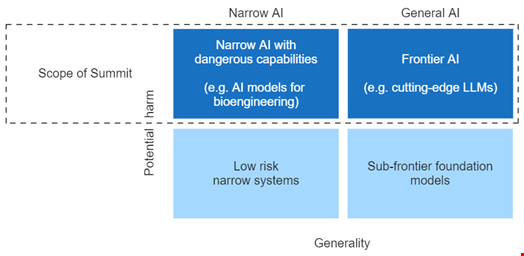

The summit introduction document added that the event's first edition will focus on two specific risks among the “small set of potential harms which are most likely to be realized at the frontier, or in a small number of cases through very specific narrow systems.”

These two risks are the following:

- Misuse risks, for example where a bad actor is aided by new AI capabilities in biological or cyber-attacks, development of dangerous technologies, or critical system interference. Unchecked, this could create significant harm, including the loss of life.

- Loss of control risks that could emerge from advanced systems that we would seek to be aligned with our values and intentions.

A few hours after the document was released, several experts criticized this choice as too narrow. They included Allan Nixon, head of science and technology at the Onward think tank and former special adviser to the British Prime Minister, and Prof. Lilian Edwards, chair of tech law at Newcastle University.

The Ada Lovelace Institute said on social media that “the AI Safety Summit’s narrow focus on ‘frontier AI’ risks overlooking many other important AI harms, challenges and solutions.”

Dr. Jack Stilgoe, a senior lecturer in social studies of science at University College London (UCL), said that “misinformation (particularly electoral); deepfake abuse; labor rights; privacy; risks to IP creators might be [other] priorities.”

AI Summit Unlikely to Achieve Consensus on AI Regulation

The government document pre-empted these concerns and said that they “are best addressed by the existing international processes that are underway, as well as nations’ respective domestic processes. For example, in the UK these risks are being considered through the work announced in the white paper on AI regulation and through wider work across government.”

However, this white paper “makes no regulatory changes,” argued Edwards.

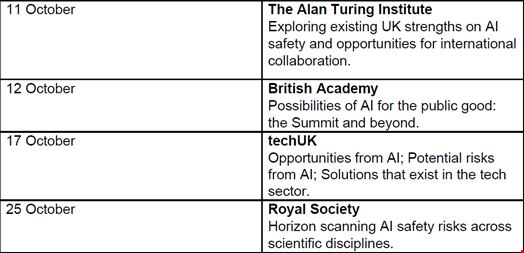

The UK government also said it wanted to “allow a wider range of voices to be heard.” For this purpose, the AI Safety Summit will be preceded by four workshops in partnership with four UK-based non-profits: the Royal Society, the British Academy, techUK and The Alan Turing Institute.

“The outcomes of these workshops will feed directly into the Summit planning, and we will publish an external summary of the engagement,” reads the document.

Speaking on the BBC Radio show Good Morning Scotland, Keegan McBride, a lecturer in AI, government and policy at the Oxford Internet Institute, said he had low expectations for the summit. “[The AI Safety Summit] is unlikely to achieve a lasting consensus on AI regulation,” he said.

This is not the first controversy around the AI Safety Summit. In September, it was made public that the UK invited a Chinese delegation to the event, while 21 EU countries were left out.

Do We Need AI Regulation?

While the UK moves cautiously toward regulating generative AI practices in accordance with its “pro-innovation approach,” other countries, such as China and EU member countries, have embarked on a stricter regulatory journey.

As many stakeholders, from generative AI providers to non-profit organizations, want to play a role in future regulations, a myriad of regulatory frameworks have been developed over the past few months.

Here are some of the most prominent AI regulatory frameworks so far:

- AI Risk Management Framework, US National Institute of Standards and Technology (NIST) – January 2023

- Secure AI Framework, Google – June 8, 2023

- A Sensible Regulatory Framework for AI Security, MITRE Corporation – June 14, 2023

- Multilayer Framework for Good Cybersecurity Practices for AI, EU ENISA – June 2023

- Year 1 Report, US National AI Advisory Committee (NAIAC) – June 22, 2023

- Regulating AI in the UK, Ada Lovelace Institute – July 2023