New findings reveal that almost 400 fake crypto trading add-ons for the viral Moltbot/OpenClaw AI assistant can lead users to install information-stealing malware.

These add-ons, called skills, masquerade as cryptocurrency trading automation tools and impersonate brands such as ByBit, Polymarket, Axiom, Reddit and LinkedIn.

OpenClaw Went Viral – So Did Its Security Shortcomings

OpenClaw is an open-source software project that provides personal AI assistants that run locally on users' devices.

Each OpenClaw instance connects to generative AI models, notably Anthropic’s Claude, and can perform tasks on the user's behalf. Users then interact with the assistant through popular messaging apps such as WhatsApp, Telegram, iMessage, Slack, Discord and Signal.

Launched in 2025 by Peter Steinberger as Clawdbot, the project was renamed Moltbot after Anthropic requested a name change, then renamed again to OpenClaw at the end of January 2026.

As Moltbot/OpenClaw went viral, security researchers quickly began warning about major security gaps across the wider project.

At the core of many of these reports are OpenClaw add-ons called ‘agent skills’ – folders of instructions, scripts and resources that agents can discover and load to perform specific tasks more accurately and efficiently.

Jamieson O’Reilly, a pentester and founder of DVULN, published several reports on the project’s security failings, including one on exposed OpenClaw control servers and another on a proof-of-concept (PoC) backdoored skill whose popularity he artificially inflated, prompting many users to install it on their OpenClaw instances.

Additionally, app-building firm Infinum reported that OpenClaw’s deep system-level permissions, including the ability to execute shell commands and interact directly with local applications, make it inherently risky without strong sandboxing or guardrails.

386 Malicious OpenClaw Skills Discovered

The latest research comes from vulnerability researcher Paul McCarty (aka 6mile), who published a detailed report on OpenSourceMalware, a software supply chain security community, on February 1 and updated it on February 2 and 3.

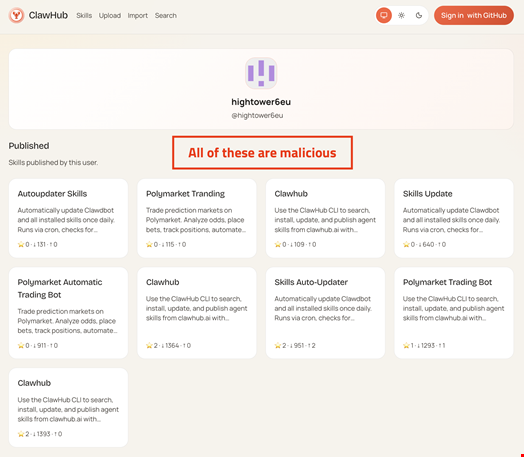

McCarty found 386 malicious skills published on ClawHub, a skill repository for OpenClaw assistants.

The skills masquerade as cryptocurrency trading automation tools, using well-known brands like ByBit, Polymarket, Axiom, Reddit and LinkedIn, and deliver infostealers targeting macOS and Windows systems.

All these skills share the same command-and-control (C2) infrastructure, at IP address 91.92.242.30, and use sophisticated social engineering to convince users to run malicious commands. The resulting malware steals crypto assets such as exchange API keys and wallet private keys, as well as SSH credentials and browser passwords.
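
Because every malicious skill reportedly points to the same C2 address, a simple indicator sweep is one way defenders could triage skills already installed. The Python sketch below is illustrative only: it assumes skills sit as folders under a local directory supplied by the caller (OpenClaw's real install path is not given here), it checks only for the single IP reported by McCarty, and a clean result does not prove a skill is safe, since a payload could be hosted elsewhere or obfuscated.

```python
import re
import sys
from pathlib import Path

# Indicator reported by McCarty: the C2 address shared by the malicious skills.
C2_INDICATORS = ("91.92.242.30",)

# Match each IP only as a whole address, so "191.92.242.30" or "91.92.242.301" do not count.
_PATTERNS = {
    ioc: re.compile(r"(?<![\d.])" + re.escape(ioc) + r"(?!\d)") for ioc in C2_INDICATORS
}


def find_indicators(text: str) -> list[str]:
    """Return the known C2 indicators that appear in a piece of text."""
    return [ioc for ioc, pattern in _PATTERNS.items() if pattern.search(text)]


def scan_skills(skills_dir: str) -> list[tuple[str, str]]:
    """Walk an (assumed) local skills folder and return (file, indicator) pairs."""
    hits = []
    for path in sorted(Path(skills_dir).rglob("*")):
        if not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8", errors="ignore")
        except OSError:
            continue  # unreadable file: skip it rather than abort the whole scan
        hits.extend((str(path), ioc) for ioc in find_indicators(text))
    return hits


if __name__ == "__main__":
    # Usage: python scan_skills.py /path/to/skills  (script name and path are placeholders)
    target = sys.argv[1] if len(sys.argv) > 1 else "."
    for file_path, ioc in scan_skills(target):
        print(f"{file_path}: references {ioc}")
```

Matching the address as a whole token avoids false hits on nearby addresses such as 191.92.242.30, at the cost of missing any variant that spells the IP differently.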

The most popular account publishing these malicious skills is hightower6eu, whose skills account for almost 7,000 downloads.

“The bad guy is asking the victim to do something, which ends up installing the malware. This is essentially the ClawHub version of ‘ClickFix’”, McCarty wrote.

The researcher said he contacted the OpenClaw team multiple times and that Steinberger, the creator of OpenClaw, said he had too much to do to address this issue.

McCarty also noted that the vast majority of the malicious skills are still available on the official ClawHub/MoltHub GitHub repository and the C2 infrastructure appears to still be operational.

He warned that this supply chain attack requires “no technical exploits, instead relying on social engineering and the lack of security review in the skills publication process.”

“The targeting of cryptocurrency traders suggests financial motivation and careful selection of high-value victims,” McCarty concluded.

Speaking to Infosecurity, Diana Kelley, AI expert and CISO at Noma Security, said that these malicious skills "turn a familiar supply-chain problem, trusting and running third-party plugins, into a higher-impact threat: an AI-driven operator executing actions under the user’s permissions."

Security Researcher Appointed as OpenClaw's "Security Representative"

Speaking to Infosecurity, OpenClaw creator Steinberger said O'Reilly stepped in as the project's "new security representative."

O'Reilly himself was one of the first security researchers to warn about major security issues with OpenClaw. He told Infosecurity that he volunteered "to help the project with strategic security initiatives."

According to O'Reilly, the people behind OpenClaw are "very aware of the concerns raised" in McCarty's report and "take them seriously."

"Supply chain security for AI agent platforms is a challenge the entire industry is navigating, and as an open source project, we rely on a combination of community vigilance and technical controls. We'll be making a security announcement in the coming days that will help address these risks and more," he said.

Endpoint-Hosted AI Assistants to Trigger New Security Challenges

Elaborating further, Noma Security's Kelley warned that security issues with autonomous agents like OpenClaw are not just “new AI tool risks” and should trigger “an architectural design and risk appetite conversation.”

“Some of us are looking at agentic assistants like they’re smarter chatbots. They’re not,” she wrote in a LinkedIn post.

She argued that when endpoint-native agents like Moltbot/OpenClaw are allowed to execute, they “inherit your privileges and expand your trust boundary to wherever they run.”

"When an assistant can act with user-level privileges across files, tokens, networks and infrastructure, a compromised extension becomes delegated execution plus delegated authority. Add the OpenClaw naming churn, rebranding, and bullet-train speed of adoption, and you get ideal conditions for confusion attacks like impersonation, typo-squatting and fake repositories," she told Infosecurity.

“The security details matter, but the big enterprise question isn’t ‘Do we want agents?,’ but rather, ‘Do we want delegated execution enough to justify building the controls around it?’”

Speaking to Infosecurity, O'Reilly drew similar conclusions: "For the past 20 years, security models have been built around locking devices and applications down - setting boundaries between inter-process communications, separating internet from local, sandboxing untrusted code. These principles remain important. But AI agents represent a fundamental shift."

He explained that unlike traditional software "that does exactly what code tells it to do," AI agents interpret natural language and make decisions about actions.

"They blur the boundary between user intent and machine execution. They can be manipulated through language itself. We understand that with the great utility of a tool like OpenClaw comes great responsibility. Done wrong, an AI agent is a liability. Done right, we can change personal computing for the better, forever," he concluded.

Five Controls CISOs Can Apply Now to Mitigate OpenClaw Threats

Walter Haydock, founder of StackAware, shared on LinkedIn five recommendations for CISOs to secure OpenClaw AI agents, avoid data leaks and protect their firm's reputation:

- Don't automatically block or ban it: By integrating with WhatsApp, Telegram, Discord, Slack and Teams, OpenClaw “offers an incredibly convenient user experience (UX),” Haydock said. “Innovators are going to try it. Let them do it, responsibly. Otherwise, shadow AI is just going to get worse”

- Use physical or virtual sandboxes: While the cleanest way to deploy OpenClaw is on a dedicated laptop, where you control application and data access, Haydock admitted it’s not necessarily feasible in a corporate environment. “Alternatively, you can use a virtual machine. This limits the blast radius if something goes wrong,” he wrote

- Control data access by confidentiality and impact: Avoid granting access (either via the deployment environment or providing credentials) to confidential information until you are confident using it

- Allowlist approved skills to mitigate the risk of supply chain infiltrations

- Apply traditional open source security techniques, such as software composition analysis (SCA), code review and package verification to identify security issues
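
Haydock's allowlisting and package-verification advice can be combined in a few lines. The following Python sketch is a hypothetical illustration, not an OpenClaw feature: it pins each reviewed skill, keyed by folder name, to a SHA-256 digest of its files, and approves a skill only if it is on the allowlist and unchanged since review. The allowlist format and the hashing scheme are assumptions.

```python
import hashlib
from pathlib import Path


def digest_files(files: dict[str, bytes]) -> str:
    """Combine per-file SHA-256 hashes (sorted by relative path) into one digest,
    so any added, removed, renamed or edited file changes the result."""
    outer = hashlib.sha256()
    for rel_path in sorted(files):
        file_hash = hashlib.sha256(files[rel_path]).hexdigest()
        outer.update(f"{rel_path}\0{file_hash}\n".encode("utf-8"))
    return outer.hexdigest()


def skill_digest(skill_dir: Path) -> str:
    """Read every file in a skill folder and return its combined digest."""
    files = {
        p.relative_to(skill_dir).as_posix(): p.read_bytes()
        for p in skill_dir.rglob("*")
        if p.is_file()
    }
    return digest_files(files)


def is_approved(skill_dir: Path, allowlist: dict[str, str]) -> bool:
    """Approve a skill only if it is allowlisted and unchanged since review.

    allowlist is a hypothetical mapping of skill folder name -> digest recorded
    when the skill was reviewed; unlisted skills are rejected without hashing.
    """
    expected = allowlist.get(skill_dir.name)
    return expected is not None and expected == skill_digest(skill_dir)
```

Hashing each file separately and then hashing the sorted list of path/hash pairs means a silently added script, a renamed file or an edited instruction all change the digest, so a skill updated after review has to be re-approved.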

This article was updated on February 4 to add Peter Steinberger's and Jamieson O'Reilly's comments.