Many government-backed cyber threat actors now use AI throughout the attack lifecycle, especially for reconnaissance and social engineering, a new Google study found.

In a report published on February 12, ahead of the Munich Security Conference, Google Threat Intelligence Group (GTIG) and Google DeepMind shared new findings on how cybercriminals and nation-state groups used AI for malicious purposes during the last quarter of 2025.

The researchers observed a wide range of AI misuse by advanced persistent threat (APT) groups. They used AI for tasks including coding and scripting, gathering information about potential targets, researching publicly known vulnerabilities and enabling post-compromise activities.

Iran, China and North Korea Use AI to Boost Cyber-Attacks

In one instance, Iranian government-backed actor APT42 used generative AI models to search for the official email addresses of specific entities and to conduct reconnaissance on potential business partners in order to establish a credible pretext.

Google researchers also observed a North Korean government-backed group (UNC2970) using Gemini, one of Google's large language models (LLMs), to synthesize open-source intelligence (OSINT) and profile high-value targets to support campaign planning and reconnaissance. The group typically impersonates corporate recruiters in its campaigns to target defense companies.

In another APT campaign, TEMP.Hex, a Chinese-nexus group also known as Mustang Panda, Twill Typhoon and Earth Preta, used Gemini and other AI tools to compile detailed information on specific individuals, including targets in Pakistan, and to collect operational and structural data on separatist organizations in various countries.

“While we did not see direct targeting as a result of this research, shortly after the threat actor included similar targets in Pakistan in their campaign. Google has taken action against this actor by disabling the assets associated with this activity,” the report indicated.

Some APT groups have also started experimenting with AI agents. Google researchers observed APT31 (aka Violet Typhoon, Judgment Panda, Zirconium), another China-backed group, using "expert cybersecurity personas" to automate vulnerability analysis and generate testing plans against US-based targets.

“For government-backed threat actors, LLMs have become essential tools for technical research, targeting, and the rapid generation of nuanced phishing lures,” the report noted.

However, Google researchers have not yet seen direct attacks on frontier AI models or generative AI products from any APT actors.

Rise of Model Extraction Attacks

In contrast, financially motivated cybercriminal groups have actively tried to hijack AI models to power their malicious campaigns.

Google identified a notable increase in model extraction attempts, specifically model stealing and capability extraction, originating from researchers and private-sector companies around the world.

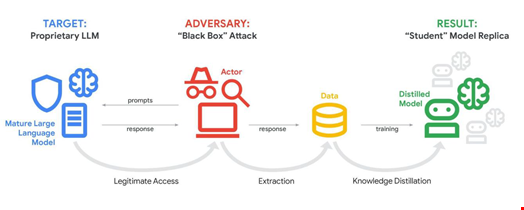

Also known as a ‘distillation attack,’ a model extraction attack (MEA) uses legitimate access to systematically probe a mature machine learning (ML) model to extract information used to train a new model.

Typically, an MEA uses a technique called knowledge distillation (KD) to take information from one model and transfer that knowledge to another. This lets the attacker accelerate AI model development at a significantly lower cost.
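
To make the distillation idea concrete, here is a deliberately tiny Python sketch. It assumes a hypothetical black-box "teacher" (a three-class softmax classifier whose weights the attacker never reads) that answers each query only with class probabilities, much as an API returns outputs. The attacker probes it with synthetic inputs, records those soft labels and trains a "student" of the same form on them, using the cross-entropy between teacher and student probabilities (distillation at temperature 1). The names `query_teacher`, `distill` and `agreement`, along with all weights and hyperparameters, are illustrative assumptions. Real extraction attempts against LLMs work on prompts and generated text at far larger scale, and this is not the method described in Google's report.

```python
import math
import random

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical teacher: these weights are private; only query_teacher() is "exposed".
_TEACHER_W = [[2.0, -1.0], [-1.5, 2.5], [-0.5, -1.5]]
_TEACHER_B = [0.3, -0.2, 0.1]

def _logits(w, b, x):
    """Linear logits for each class c: w_c . x + b_c."""
    return [sum(wc_j * x_j for wc_j, x_j in zip(wc, x)) + bc for wc, bc in zip(w, b)]

def query_teacher(x):
    """Black-box API stand-in: returns class probabilities, never weights."""
    return softmax(_logits(_TEACHER_W, _TEACHER_B, x))

def distill(num_queries=500, epochs=300, lr=0.5, seed=0):
    """Train a student softmax-regression model on the teacher's soft labels."""
    rng = random.Random(seed)
    # 1. Probe the teacher with synthetic inputs and record its outputs.
    xs = [[rng.uniform(-3, 3), rng.uniform(-3, 3)] for _ in range(num_queries)]
    soft_labels = [query_teacher(x) for x in xs]

    # 2. Fit the student by full-batch gradient descent on the
    #    cross-entropy between teacher and student probabilities.
    k, d = 3, 2
    w = [[0.0] * d for _ in range(k)]
    b = [0.0] * k
    for _ in range(epochs):
        grad_w = [[0.0] * d for _ in range(k)]
        grad_b = [0.0] * k
        for x, t in zip(xs, soft_labels):
            p = softmax(_logits(w, b, x))
            for c in range(k):
                err = p[c] - t[c]  # d(loss)/d(logit_c) for soft targets
                grad_b[c] += err
                for j in range(d):
                    grad_w[c][j] += err * x[j]
        for c in range(k):
            b[c] -= lr * grad_b[c] / num_queries
            for j in range(d):
                w[c][j] -= lr * grad_w[c][j] / num_queries
    return w, b

def agreement(w, b, num_tests=500, seed=1):
    """Fraction of fresh inputs where student and teacher pick the same class."""
    rng = random.Random(seed)
    matches = 0
    for _ in range(num_tests):
        x = [rng.uniform(-3, 3), rng.uniform(-3, 3)]
        teacher_p = query_teacher(x)
        student_p = softmax(_logits(w, b, x))
        if teacher_p.index(max(teacher_p)) == student_p.index(max(student_p)):
            matches += 1
    return matches / num_tests

if __name__ == "__main__":
    w, b = distill()
    print(f"student/teacher agreement: {agreement(w, b):.2%}")
```

Because the student only ever sees the teacher's outputs, a high agreement score on fresh inputs illustrates how query access alone can leak a model's behavior, which is why providers treat systematic probing of this kind as potential IP theft.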

While model extraction and distillation attacks do not typically put average users at risk, as they do not threaten the confidentiality, availability or integrity of AI services, Google said they pose a risk of intellectual property (IP) theft for model developers and service providers, and such activity violates Google's terms of service.

Jailbroken Models and AI-Enabled Malware

Another major AI threat Google identified is the rise of an underground 'jailbreak' ecosystem, which includes new AI tools and services purpose-built to enable illicit activities.

Google noted that malicious services are emerging on underground marketplaces. These services claim to be independent models but actually rely on jailbroken commercial application programming interfaces (APIs) and open-source Model Context Protocol (MCP) servers.

For example, Xanthorox is an underground toolkit that advertises itself as a "bespoke, privacy preserving self-hosted AI" designed to autonomously generate malware, ransomware and phishing content.

“However, our investigation revealed that Xanthorox is not a custom AI but actually powered by several third-party and commercial AI products, including Gemini,” the Google report said.

Google also observed threat actors abusing the public sharing features of AI platforms such as Gemini and OpenAI's ChatGPT to host deceptive social engineering content. Using common techniques such as 'ClickFix', they trick users into manually executing malicious commands, hosting the instructions on trusted AI domains to bypass security filters.

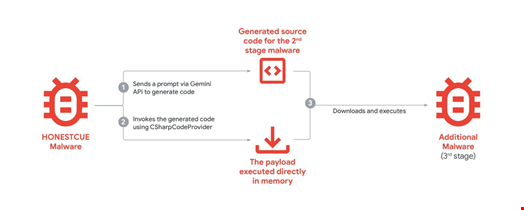

Finally, in late 2025, GTIG continued to observe threat actors experimenting with AI to add novel capabilities to malware families.

In September 2025, GTIG identified the Honestcue malware leveraging Google Gemini’s API to dynamically generate and execute malicious C# code in memory, showcasing how threat actors exploit AI to evade detection while refining fileless attack techniques.

This report updates Google's November 2025 findings on advances in threat actors' use of AI tools.