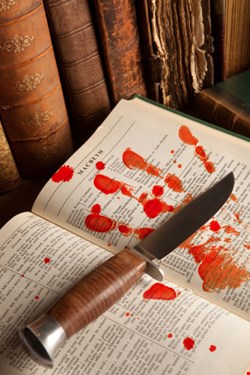

It is a tale / Told by an idiot, full of sound and fury, / Signifying nothing.

– Macbeth

It’s been a bad few months for data breaches, and unless I’m much mistaken, there’s no reason to think that we’re out of the woods yet. There is no obvious reason why data breaches continue to occur, and indeed continue to get worse, despite record spending on security technology. Or perhaps it’s fairer to say there are a lot of reasons: sophisticated attackers, human nature, inattention, even plain old bad luck. The frustrating thing is that much of the security spending of the last few years was designed to mitigate exactly those problems (human nature, bad luck, and so on), yet over the same period data breaches have become more frequent and the damage even greater.

Clearly, something is going wrong. Some vendors would argue that the solution is found in the next generation of security technology, forever glimmering just beyond the horizon. I don’t. In fact, I’d like to suggest that the rush to invest in new security technology is not going to solve the problem and in many ways it is the problem itself.

Proliferation of security technology in response to attacks, breaches or threats has left most security organizations trying to manage such a bewildering array of tools, and generating so much data (and I use that term loosely) that there is little hope of actually using the investment to significantly improve critical security functions.

Breaches occur not because of a single point of failure, but because of many problems, each compounding the impact of the others. The approach, then, of deploying many point solutions to address point problems has often provided short-term relief at the cost of long-term security. As a strategy it relies on two critical elements: first, that the point solutions in place can identify, often with little additional context, the specific attack; and, second, that the security organization is able to wade through the background noise of other events and spot something significant when it happens. As can be seen from recent history, this strategy has failed and was doomed to fail from the very beginning.

Take a typical large data breach scenario: An attacker gets access through a web-facing application via an SQL injection attack, then begins to work their way around the infrastructure using a variety of means, probably looking for stale accounts, service accounts, systems with known vulnerabilities and so on. Finally a custom-built and difficult-to-detect piece of malware gets dropped in a vital location, at which point it’s probably too late to prevent damage from being done.

And while all this is going on, the security team is spending their days wading through floods of events and running from one ‘fire drill’ to the next. The real damage is taking place under their noses and they are, in all probability, simply too busy to see it, deafened by the constant klaxon of false alarms and exhausted by the battle to achieve even incremental goals. If there’s one thing they don’t need, it’s another tool to manage.

So what is the answer?

It’s time for organizations to take a deep breath, look at what they are actually trying to achieve, and put processes in place to make it happen. Of course tools are important, but their job is to feed usable information to the security folks who are there to stop the bad guys. Aggregation of information, correlation of events, and real, useful security intelligence are what’s needed.
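To make "correlation of events" a little more concrete, here is a minimal sketch in Python. It is an illustration only, not a description of any particular product: the `Event` record, the tool names and the `correlate` function are all hypothetical, and the 30-minute window is an arbitrary assumption. The idea is simply that a host reported by several different tools in a short span of time deserves attention before a host that one tool keeps complaining about.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Event:
    """One alert from one security tool (hypothetical record layout)."""
    source: str          # tool that raised it, e.g. "waf" or "ids" (assumed names)
    host: str
    timestamp: datetime
    message: str


def correlate(events, window=timedelta(minutes=30), min_sources=2):
    """Return hosts on which at least `min_sources` distinct tools
    reported events within `window` of each other."""
    by_host = defaultdict(list)
    for event in events:
        by_host[event.host].append(event)

    flagged = {}
    for host, host_events in by_host.items():
        host_events.sort(key=lambda e: e.timestamp)
        start = 0
        for end in range(len(host_events)):
            # Slide the left edge forward until the span fits inside `window`.
            while host_events[end].timestamp - host_events[start].timestamp > window:
                start += 1
            in_window = host_events[start:end + 1]
            if len({e.source for e in in_window}) >= min_sources:
                flagged[host] = in_window
                break
    return flagged
```

A real deployment would correlate on far more than the host name (accounts, source addresses, asset value), but even this crude grouping turns four separate alerts into one question worth asking.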

Much as in Macbeth’s quote from the beginning of this piece, the meaningless tales told by disjointed and non-integrated security tools tell us nothing. Over the last few years, security information and event management (SIEM) technologies have taken some steps to address this problem, but they do not go far enough by themselves. What is needed is something far more systemic and broad, crossing silos of security, identity management and operations.

Good processes that ensure the security team gets information on events when they need it and only when they need it, good filtering of the background noise, intelligent integration with other business technology (especially change management), and even automated response can and will provide both the tactical support security teams need to do their job more effectively and the strategic gain of extending the value of the tools already in place.
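As a rough illustration of what integration with change management can mean in practice, the sketch below drops configuration-change alerts that fall inside an approved change window and keeps the rest. The `ChangeTicket` and `ConfigChangeEvent` records and the `unapproved_changes` function are hypothetical names invented for this example; a real change-management system will expose its tickets in its own way.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class ChangeTicket:
    """An approved change window for one host (assumed record layout)."""
    host: str
    start: datetime
    end: datetime


@dataclass(frozen=True)
class ConfigChangeEvent:
    """A configuration change detected on a host (assumed record layout)."""
    host: str
    timestamp: datetime
    detail: str


def unapproved_changes(events, tickets):
    """Keep only the change events that no approved ticket accounts for."""
    def is_approved(event):
        return any(
            ticket.host == event.host
            and ticket.start <= event.timestamp <= ticket.end
            for ticket in tickets
        )

    return [event for event in events if not is_approved(event)]
```

The point is less the code than the effect: a change made during a scheduled maintenance window is background noise, while the same change at two in the afternoon with no ticket behind it is exactly the kind of event the security team needs to see.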

This approach is good security because it actually lessens complexity. It’s good for the security teams because they at least have better information that is more easily accessible. Ultimately it’s good for the business as a whole, extending, as it does, the power of the people and technology already in place.

Geoff Webb is a security expert with more than 20 years of experience in the tech industry. As a senior manager of product marketing at NetIQ, Webb is responsible for the positioning, go-to-market strategies and sales enablement of NetIQ's compliance, security management and configuration control solutions.

Prior to joining NetIQ in 2007, Webb held management positions at FutureSoft, SurfControl and JSB. Webb holds a combined bachelor of science degree in computer science and prehistoric archaeology from the University of Liverpool, where he graduated with honors. He is also a member of both the Information Systems Security Association and the American Marketing Association.