The first industry standard for Large language models (LLMs) marks a turning point that could critically impact the adoption of LLMs in business environments.

This effort was not led by generative AI providers, rather it was pioneered by the Open Worldwide Application Security Project (OWASP) which recently released version 1.0 of its Top 10 for Large Language Model Applications.

OWASP’s Top 10s are community-driven lists of the most common security issues in a field designed to help developers implement their code safely.

This top 10 was started by Steve Wilson, chief product officer at Contrast Security, who worked alone on version 0.1 over one weekend in the Spring of 2023 because there were no comprehensive resources on LLM vulnerabilities. The Top 10 for LLMs project was approved by the OWASP foundation in May.

For this, Wilson led a team of 15 core members, over 100 active contributors and over 400 community members, including security specialists, AI researchers, developers, industry leaders and academics.

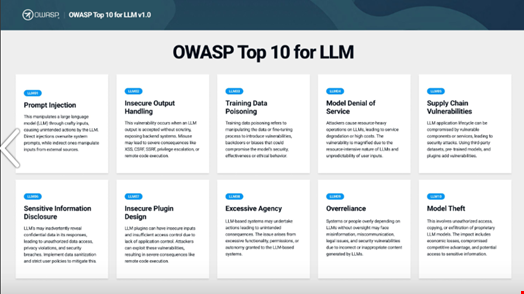

From Prompt Injection to Model Theft

From over 45 vulnerabilities proposed in version 0.5, the contributors decided the 10 most critical vulnerabilities for LLM applications were the following:

- Prompt Injection

- Insecure Output Handling

- Training Data Poisoning

- Model Denial of Service

- Supply Chain Vulnerabilities

- Sensitive Information Disclosure

- Insecure Plugin Design

- Excessive Agency

- Overreliance

- Model Theft

These are listed in order of criticality, and each is enriched with a definition, examples, attack scenarios and prevention measures.

John Sotiropoulos, a senior security architect at Kainos and part of the core group behind OWASP’s Top 10 for LLMs, said they tried to look across the spectrum of all the risks that LLM applications could raise.

To assess the level of criticality of each vulnerability the OWASP team considered its sophistication and its relevance in how people use LLM tools, which means that the 10 listed entries range from the risk most inherent to using a single LLM to supply chain risks.

“For example, ‘Prompt injection’ (LLM01), which is the ability to influence the outputs of a language model with specific, hard-to-detect instructions, is the top vulnerability because you will face it regardless of what model you use and how you use it. On the other hand, ‘Insecure Plugin Design’ (LLM07) requires additional plugins, so it’s further down the list,” Sotiropoulos told Infosecurity.

A Foundational Document for Regulating LLMs

Like other OWASP Top 10 lists, this document is meant for developers, data scientists and security professionals.

Wilson explains: “There are a lot of privacy and security concerns around the personal use of LLMs at the individual and the corporate levels. However, the OWASP foundation’s focus has always been on the software development community, and that’s who we intended this document for.”

“These may represent a smaller target than all people using ChatGPT and other general-purpose LLM applications, but they are the ones trying to figure out how to embed LLMs into their own applications. They attach critical systems and data to these external tools and should be informed of the risks first,” he added.

However, Sotiropoulos said that it is also a very “useful compass” for technology leaders and decision-makers for assessing what risks apply to their business and how to start mitigating them.

"LLMs seem to be taking the software development world by storm."John Sotiropoulos, OWASP Top 10 for LLM Core Experts Group

Wilson’s ambition for the Top 10 for LLM applications is for it to become a foundational document that other standard and regulation bodies could build on. Whether that is for government regulation like the EU AI Act or for self-regulatory pushes that the US federal government is trying to encourage.

This ambition is already starting to take shape, with the US Department of Health and Human Services referencing version 0.5 of the OWASP document in its Artificial Intelligence, Cybersecurity and the Health Sector advisory from July and the US Intelligence Services mentioning it in a research project on LLMs just one day after its official launch.

“We’ve received an incredible response from the software development community on our GitHub and the Slack channel we use to coordinate the project, as well as through social media, with 30,000 views of the announcement and 200 reposts within the first day. Now we’d love to get feedback from the likes of the US National Institute of Standards and Technology (NIST), the EU’s cybersecurity agency (ENISA) and the UK National Cyber Security Centre (NCSC),” Sotiropoulos said.

How Relevant are CVEs?

While both Wilson and Sotiropoulos believe the top 10 will not change, the core contributing members are already working on the next steps, with a light update planned in a few days and a version 2.0 in early 2024.

“The software development landscape is changing. Applications are beginning to incorporate machine learning, and LLMs seem to be taking the world by storm. So, we need to make sure we equip developers with the right, actionable tools to understand and mitigate the risks. What we’re specifically looking into is making it more actionable for software developers, which will mean incorporating existing vulnerability management frameworks like the Common Vulnerabilities and Exposures (CVE) system,” Sotiropoulos said.

However, he added that since many LLM vulnerabilities use unstructured natural language instead of specific programming languages, the CVE system itself will need to evolve to cover natural language processing practices.

Read more: Dark Web Markets Offer New FraudGPT AI Tool

Wilson agreed: “From what I’ve seen, there still are, in essence, traditional software weaknesses in LLM applications that can be identified using the CVE system and given a level of criticality using the Common Vulnerability Scoring System (CVSS). But it’s true that with LLMs, you are dealing, for the first time, with a software entity that understands both human and programming languages – so you could get exploits that leverage English and SQL or Japanese and Python simultaneously.”

That’s why OWASP has included in its Top 10 issues like ‘Excessive Agency’ (LLM08) and ‘Overreliance’ (LLM09), which can sound more like psychological biases than technical vulnerabilities.

“Because LLMs are based on human language databases, these become relevant threats,” Wilson argues.

Working Towards an AI Bill of Materials Mandate

OpenAI, Google and Anthropic, have already been extensively criticized for their lack of transparency when it comes to vulnerabilities and security concerns. However, Wilson is optimistic that AI providers will evolve their posture in that regard.

“When you look at OpenAI, you see a bunch of people who are clearly super brilliant at AI but have less experience building enterprise-class software. At the moment, they’re moving fast and breaking things, and maybe they’ve opted for security by obscurity until they get their framework in order,” Wilson said.

They could be mandated to be more transparent sooner rather than later, Sotiropoulos argued, with “a recent proposition in the US Army of introducing a mandate for an AI bill of materials, just like what the US government has started doing with the requirement of software bills of materials (SBOMs) for government agencies.”

In the meantime, Kairos’ senior security architect said the role of OWASP is not to comment on the choices of specific private actors but to “show what good looks like.”

Regulation Beyond the API Model

This lack of transparency from generative AI leaders is probably one of the main drivers of open source LLMs, Wilson said.

“Quality-wise, these open source LLMs aren’t up to GPT-4 yet, but you see a lot of effort going into this field, with the platform HuggingFace becoming a giant community hub for open source LLM savvies. Even mega-scaler cloud providers are embracing them, like AWS with Bedrock, its generative AI service that aims to offer access to private and open source LLMs,” he said.

Some of the most prominent open source players are now trying to influence the future EU AI Act, with GitHub, Hugging Face, Creative Commons and others asking the EU Commission in a July open letter to “ensure that open AI development practices are not confronted with obligations that are structurally impractical to comply with or that would be otherwise counterproductive.”

Read more: EU Passes Landmark Artificial Intelligence Act

One concern highlighted by the signatories is that the EU focuses its effort on using application programming interfaces (APIs). This refers to how private LLM providers grant access to their models for developing applications, thereby hindering open source value chains.

Sotiropoulos agrees: “At this stage, it’s true that regulation should keep as vendor-agnostic and as open to all types of usage as possible.”

Meanwhile, Wilson says he’s pleased with the EU’s approach so far.

“They really seem to be targeting how you use the technology, not what technology you use. They are not trying to dictate the brittle details, such as model sizes and architectures, which is good since we’re very early in the LLM journey.”

The EU AI Act is expected to pass into law early in 2024.