AI adoption is accelerating faster than enterprise oversight, creating a rapidly widening attack surface across all sectors, said Zscaler in its ThreatLabz 2026 AI Security Report.

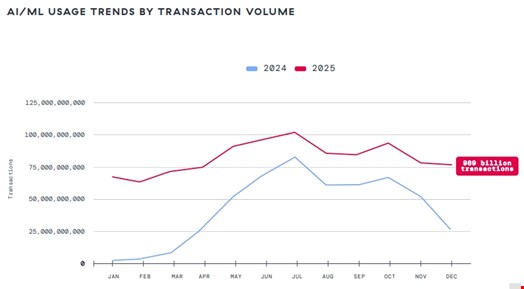

The annual report, published on January 27, 2026, analyzed 989.3 billion AI and machine learning transactions across the Zscaler Zero Trust Exchange platform between January and December 2025.

The cybersecurity firm found that despite 91% growth in AI usage across an ecosystem of more than 3,400 AI applications, many organizations still lack a basic inventory of their AI models and embedded AI features.

Finance and insurance remain the most AI-driven sectors by volume, together accounting for 23% of all AI/ML traffic, while the technology and education sectors recorded explosive year-over-year growth in AI transactions (202% and 184%, respectively).

Engineering was the heaviest user of AI, accounting for 48.9% of all AI usage, followed by IT (31.8%) and marketing (6.9%).

Enterprise AI activity observed by Zscaler was largely concentrated in the US, which accounted for 38% of transactions, followed by India (14%) and Canada (5%).

OpenAI was the top LLM vendor in every month of 2025, followed by Codeium and Perplexity.

Alongside the uptick in AI usage, Zscaler analysts found critical vulnerabilities in 100% of the AI systems and applications they analyzed, with 90% of systems compromised in under 90 minutes.

The median time to first critical failure was just 16 minutes, and some systems failed in as little as one second.

Enterprise AI Apps Drive Data Privacy Violations

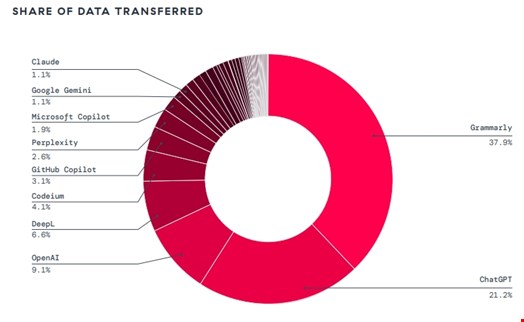

In parallel with the surge in AI usage, Zscaler reported a staggering 18,033 TB of enterprise data transferred to AI/ML applications, a 93% year-over-year increase.

“The massive influx has transformed tools like Grammarly (3,615 TB) and ChatGPT (2,021 TB) into the world’s most concentrated repositories of corporate intelligence,” said the report.

The scale of the risk is underscored by 410 million data loss prevention (DLP) policy violations tied to ChatGPT alone, including attempts to share Social Security numbers, source code and medical records.

“These findings signal that AI governance has transitioned from a policy discussion to an immediate operational necessity,” Zscaler analysts noted in the report.

Finally, the report anticipates that autonomous and semi-autonomous “agentic” AI will increasingly automate cyber-attacks in the near future, with AI agents taking on reconnaissance, exploitation and lateral movement.

“Defenders must assume that attacks can scale and adapt at machine speed, not human speed,” warned the report.

Deepen Desai, EVP of cybersecurity at Zscaler, said that AI can no longer be considered a simple productivity tool “but a primary vector for autonomous, machine-speed attacks by both crimeware and nation-state.”
