A new study has revealed that nearly two-thirds of leading private AI companies have leaked sensitive information on GitHub.

Wiz researchers examined 50 firms from the Forbes AI 50 list and confirmed that 65% had exposed verified secrets such as API keys, tokens and credentials. Collectively, the affected companies are valued at more than $400bn.

The research, published today, suggests that rapid innovation in artificial intelligence is outpacing basic cybersecurity practices. Even companies with minimal public repositories were found to have leaked information.

One firm with no public repositories and only 14 organization members still exposed secrets, while another with 60 public repositories avoided leaks entirely, likely due to stronger security practices.

Digging Below the Surface

To identify these exposures, the researchers said they expanded their scanning beyond traditional GitHub searches.

Wiz’s “Depth, Perimeter and Coverage” framework looked deeper into commit histories, deleted forks, gists and even contributors’ personal repositories.

This approach helped uncover secrets hidden in obscure or deleted parts of codebases that standard scanners often miss.

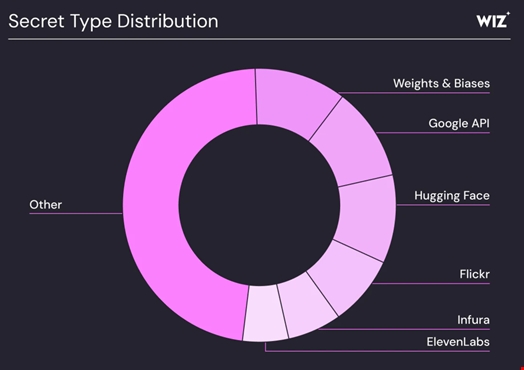

Among the most commonly leaked credentials were API keys from Weights & Biases, ElevenLabs and Hugging Face. Some of these could have allowed access to private training data or organizational information, both critical assets for AI development.


Disclosure Challenges

While some companies, including LangChain and ElevenLabs, acted swiftly to fix their exposures, the broader disclosure landscape remains uneven. Nearly half of all disclosures either went unanswered or failed to reach their targets.

Many organizations lacked an official process for receiving and responding to vulnerability reports, highlighting a significant gap in corporate security readiness.

Examples of the leaked secrets include LangChain API keys found in Python and Jupyter files, and an ElevenLabs key discovered in a plaintext configuration file. Another, unnamed AI 50 firm had a Hugging Face token in a deleted fork, exposing roughly 1,000 private models.
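One lightweight way to publish a disclosure channel is a `security.txt` file served at `/.well-known/security.txt`, as defined in RFC 9116, which requires a `Contact` field and an `Expires` field. The report does not prescribe this format. The Python helper below is a hypothetical sketch that builds such a file; the function name, parameters and example addresses are placeholders.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def build_security_txt(contact: str, policy: Optional[str] = None,
                       valid_days: int = 180,
                       now: Optional[datetime] = None) -> str:
    """Return the body of a /.well-known/security.txt file (RFC 9116)."""
    # Contact must be a URI, e.g. mailto:, https: or tel:.
    if not contact.startswith(("mailto:", "https://", "tel:")):
        raise ValueError("Contact must be a URI such as mailto: or https:")
    # RFC 9116 recommends an Expires value less than a year in the future.
    if not 0 < valid_days < 365:
        raise ValueError("valid_days should be between 1 and 364")
    now = now or datetime.now(timezone.utc)
    expires = (now + timedelta(days=valid_days)).strftime("%Y-%m-%dT%H:%M:%SZ")
    lines = [f"Contact: {contact}", f"Expires: {expires}"]
    if policy:
        # Optional link to the organization's disclosure policy.
        lines.append(f"Policy: {policy}")
    return "\n".join(lines) + "\n"
```

A short expiry forces the file to be revisited periodically, so reports are less likely to go to an address nobody monitors.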

Strengthening Defenses

To counter these dangers, Wiz researchers urge AI startups to:

- Implement mandatory secrets scanning for all public repositories
- Establish clear disclosure channels for external researchers
- Develop proprietary scanners for their unique secret types
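A scanner for an organization's own secret types can start as a pattern plus a filter for obvious placeholders. The Python sketch below assumes a hypothetical in-house token format, `acme_live_` or `acme_test_` followed by 32 alphanumeric characters, and uses Shannon entropy to skip low-randomness strings such as documentation examples. The prefix, length and 3.5-bit threshold are illustrative assumptions, not recommendations from the report.

```python
import math
import re
from collections import Counter

# Hypothetical in-house token format; replace with the organization's real prefix and length.
CUSTOM_TOKEN = re.compile(r"\bacme_(?:live|test)_([A-Za-z0-9]{32})\b")


def shannon_entropy(s: str) -> float:
    """Average bits of information per character in s."""
    if not s:
        return 0.0
    n = len(s)
    counts = Counter(s)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def find_custom_tokens(text: str, min_entropy: float = 3.5) -> list[str]:
    """Return full tokens whose random part looks random enough to be real."""
    hits = []
    for match in CUSTOM_TOKEN.finditer(text):
        body = match.group(1)
        # Placeholders such as "xxxx..." have near-zero entropy and are skipped.
        if shannon_entropy(body) >= min_entropy:
            hits.append(match.group(0))
    return hits
```

Generic scanners cannot know an in-house prefix, which is the gap the recommendation targets. The entropy filter trades a small risk of missing a weak token for far fewer false alarms on example strings.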

The report concludes that as AI development accelerates, so must its security practices.

“Speed cannot compromise security,” Wiz said.

“For teams building the future of AI, both must move together.”