Several vulnerabilities in ChatGPT allowed an attacker to make the tool leak sensitive data from popular services, such as Gmail, Outlook, Google Drive or GitHub, using a newly identified prompt injection technique.

This new method, dubbed ‘ZombieAgent,’ was discovered by Zvika Babo, a security researcher at Radware, who reported it to OpenAI via the Bugcrowd bug bounty platform in September 2025. OpenAI fixed it in mid-December.

Babo is now sharing his findings in a new Radware report, published on January 8.

ChatGPT’s Agentic Shift: A Boon for Users and Attackers

OpenAI recently extended ChatGPT’s capabilities with new user-oriented features, such as ‘Connectors,’ which let the chatbot quickly connect to popular external services (e.g., Gmail, Outlook, Google Drive, GitHub), as well as the ability to browse the internet, open links, analyze data, generate images and more.

This agentic shift makes ChatGPT “far more useful, convenient and powerful,” Babo acknowledged in the Radware report, but it also gives the tool access to sensitive personal data.

In a previous Radware report, Babo and two colleagues disclosed a structural vulnerability in ChatGPT’s Deep Research agent that allowed an attacker to make the agent leak sensitive Gmail inbox data with a single crafted email.

The technique, which the researchers dubbed ‘ShadowLeak,’ enabled service-side exfiltration: a successful attack chain leaks data directly from OpenAI’s cloud infrastructure, making it invisible to local or enterprise defenses.

It relied on indirect prompt injection, hiding commands in an email’s HTML with tricks such as white-on-white text or microscopic fonts, so that users remain unaware while the Deep Research agent executes them. These commands would typically point the agent to an attacker-controlled link and have it append sensitive data as URL parameters (e.g., via Markdown images or browser.open()).

To counter this, OpenAI strengthened its guardrails and prohibited ChatGPT from dynamically modifying URLs. “ChatGPT can now only open URLs exactly as provided and refuses to add parameters, even if explicitly instructed,” Babo summarized in the latest report.
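OpenAI has not published how this guardrail is implemented. The sketch below is a hypothetical, simplified model of the behavior described in the report: the agent may open a URL only if it appears verbatim in the content it was given, so a ShadowLeak-style request that appends data as a query parameter is refused. The function name `check_open` and the domain `attacker.example` are illustrative assumptions.

```python
from urllib.parse import urlsplit, urlunsplit


def check_open(requested: str, provided_urls: set[str]) -> tuple[bool, str]:
    """Hypothetical exact-match guardrail (not OpenAI's actual code)."""
    # Allowed only if the URL appears character for character in the input.
    if requested in provided_urls:
        return True, "exact match"
    # Strip query string and fragment only to explain *why* a near-match fails.
    parts = urlsplit(requested)
    base = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
    if base in provided_urls:
        return False, "refused: parameters added to a provided URL"
    return False, "refused: URL was not provided"


if __name__ == "__main__":
    provided = {"https://attacker.example/collect"}
    print(check_open("https://attacker.example/collect", provided))
    print(check_open("https://attacker.example/collect?name=alice", provided))
```

Under this model, the second call (data appended as `?name=alice`) is rejected even though its base URL was supplied, which is the ShadowLeak pattern the fix targets.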

However, the researcher has since found a way to fully bypass this protection.

ZombieAgent: Attack Flow Explained

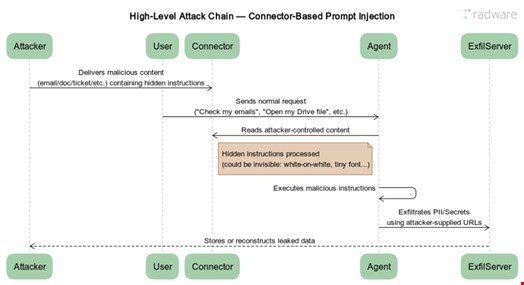

This attack exploits a weakness in OpenAI’s URL-modification defenses by leveraging pre-constructed, static URLs to exfiltrate sensitive data from ChatGPT one character at a time.

Instead of dynamically generating URLs – which would trigger OpenAI’s security filters – the attacker provides a fixed set of URLs, each corresponding to a specific character (letters, digits, or a space token). ChatGPT is then instructed to:

- Extract sensitive data (e.g., from emails, documents, or internal systems)

- Normalize the data (convert to lowercase, replace spaces with a special token like $)

- Exfiltrate it character by character by "opening" the pre-defined URLs in sequence

By using indexed variants of each character’s URL (e.g., a0, a1, ..., a9), the attacker can preserve the order of the exfiltrated data.

Because ChatGPT never constructs a URL and only opens the exact links provided, the technique bypasses OpenAI’s URL rewriting and blocklist protections.
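The Python sketch below models the encoding scheme under stated assumptions; it does not interact with ChatGPT. It assumes that the index in a URL such as `a0` records the character's position in the leaked string (the report's exact indexing may differ), that unsupported symbols are simply dropped, and that the domain `attacker.example` and every function name are hypothetical. The key point it illustrates is that each URL the agent "opens" is selected from a table written in advance, never built, so an exact-match rule has nothing to reject.

```python
import string

# "$" stands in for a space, as in the normalization step described above.
ALPHABET = string.ascii_lowercase + string.digits + "$"
# Hypothetical: one indexed variant per position (a0 ... a9), so at most 10 characters.
MAX_LEN = 10


def build_url_table(base: str) -> dict[tuple[str, int], str]:
    """Write out, in advance, one fixed URL for every (character, position) pair."""
    return {(ch, i): f"{base}/{ch}{i}" for ch in ALPHABET for i in range(MAX_LEN)}


def normalize(secret: str) -> str:
    """Lowercase, replace spaces with "$", drop other symbols, truncate to MAX_LEN."""
    text = secret.lower().replace(" ", "$")
    return "".join(ch for ch in text if ch in ALPHABET)[:MAX_LEN]


def urls_to_open(secret: str, table: dict[tuple[str, int], str]) -> list[str]:
    """Select (never build) the pre-written URL for each character, in order."""
    return [table[(ch, i)] for i, ch in enumerate(normalize(secret))]


def reconstruct(opened: list[str], base: str) -> str:
    """Receiving side: read character and index back out of each requested path."""
    by_position = {}
    for url in opened:
        token = url[len(base) + 1:]  # e.g. "h0" or "$2"
        by_position[int(token[1:])] = token[0]
    return "".join(by_position[i] for i in sorted(by_position)).replace("$", " ")


if __name__ == "__main__":
    base = "https://attacker.example"
    table = build_url_table(base)
    opened = urls_to_open("Hi Bob 42", table)
    # Every URL is copied verbatim from the pre-written table.
    print(all(url in table.values() for url in opened))  # True
    # The position index restores order even if requests arrive reversed.
    print(reconstruct(opened[::-1], base))  # hi bob 42
```

In this model, giving every (character, position) pair its own URL means repeated letters never map to the same link, and the receiver can rebuild the original order from the indices alone. That is one plausible reading of why the attack uses indexed URLs.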

Here’s the attack flow:

- The attacker sends a malicious email containing pre-built URLs and exfiltration instructions

- The user later asks ChatGPT to perform a Gmail-related task

- ChatGPT reads the inbox, executes the attacker’s instructions and exfiltrates data

- No user action is required beyond normal conversation with ChatGPT

Zero-Click and One-Click Attacks Using ZombieAgent

Using this exploitation technique, Babo demonstrated two successful server-side prompt injection attacks: one requiring no clicks and another requiring just one click.

“We’ve also demonstrated a method to achieve persistence, allowing the attacker not just a one-time data leak, but ongoing exfiltration: once the attacker infiltrates the chatbot, they can continuously exfiltrate every conversation between the user and ChatGPT,” Babo wrote.

“In addition, we’ve demonstrated a new propagation technique that allows an attack to spread further, target specific victims and increase the likelihood of reaching additional targets.”

Radware will host a live webinar to explain the ZombieAgent exploitation chain on January 20, 2026.